Introduction

For the past 6 months, Serge Hallyn, Tycho Andersen, Chuck Short, Ryan Harper and I have been very busy working on a new container project called LXD.

Ubuntu 15.04, due to be released this Thursday, will contain LXD 0.7 in its repository. These are still early days, and while we’re confident LXD 0.7 is functional and ready for users to experiment with, we still have some work to do before it’s ready for critical production use.

So what’s LXD?

LXD is what we call our container “lightervisor”. The core of LXD is a daemon which offers a REST API to drive full system containers just like you’d drive virtual machines.

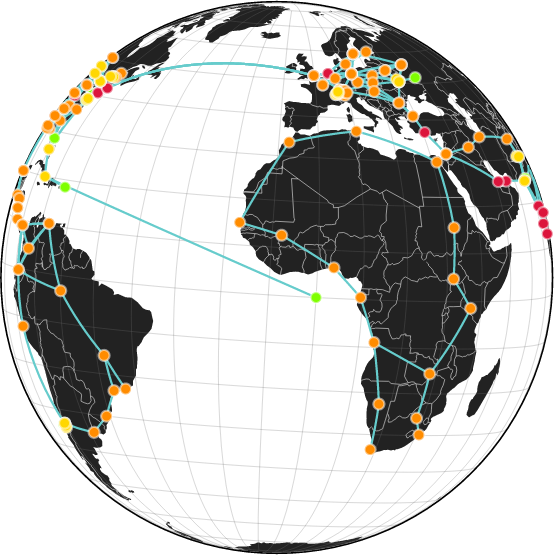

The LXD daemon runs on every container host. Client tools then connect to those daemons to manage their containers, or to move or copy them to another LXD daemon.

We provide two such clients:

- A command line tool called “lxc”

- An OpenStack Nova plugin called nova-compute-lxd

The former is mostly aimed at small deployments ranging from a single machine (your laptop) to a few dozen hosts. The latter seamlessly integrates inside your OpenStack infrastructure and lets you manage containers exactly like you would virtual machines.
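Since the daemon is driven through a REST API, it can also be queried without either client. The following is a minimal sketch, not something taken from the LXD tree. It assumes the local daemon listens on a unix socket at /var/lib/lxd/unix.socket, that the API root is /1.0, and that your curl is recent enough to support --unix-socket. The lxd_get helper name is made up for this example.

```shell
# Hypothetical helper: send a GET request to the local LXD daemon.
# Assumption: the daemon's unix socket lives at /var/lib/lxd/unix.socket;
# set LXD_SOCKET if your system differs.
lxd_get() {
    # The host part of the URL ("lxd") is only a placeholder; curl reaches
    # the daemon through the socket, not through DNS.
    curl -s --unix-socket "${LXD_SOCKET:-/var/lib/lxd/unix.socket}" "http://lxd$1"
}
```

With access to that socket, running lxd_get /1.0 should return a JSON document describing the server, which is the same kind of request the clients make on your behalf.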

Why LXD?

LXC has been around for about 7 years now. It evolved from a set of very limited tools which would get you something only marginally better than a chroot, all the way to the stable set of tools, stable library and active user and development community that we have today.

Over those years, a lot of extra security features were added to the Linux kernel and LXC grew support for all of them. As we saw the need for people to build their own solutions on top of LXC, we developed a public API and a set of bindings. And last year, we put out our first long term support release, which has been a great success so far.

That being said, for a while now, we’ve been wanting to make a few big changes:

- Make LXC secure by default (rather than it being optional).

- Completely rework the tools to make them simpler and less confusing to newcomers.

- Rely on container images rather than using “templates” to build them locally.

- Add proper checkpoint/restore support (live migration).

Unfortunately, solving any of those means making very drastic changes to LXC, which would likely break our existing users or at least force them to rethink the way they do things.

Instead, LXD is our opportunity to start fresh. We’re keeping LXC as the great low level container manager that it is, and building LXD on top of it, using LXC’s API to do all the low level work. That achieves the best of both worlds: we keep our low level container manager with its API and bindings but skip using its tools and templates, replacing those with the new experience that LXD provides.

How does LXD relate to LXC, Docker, Rocket and other container projects?

LXD is currently based on top of LXC. It uses the stable LXC API to do all the container management behind the scenes, adding the REST API on top and providing a much simpler, more consistent user experience.

The focus of LXD is on system containers, that is, containers which run a clean copy of a Linux distribution or a full appliance. From a design perspective, LXD doesn’t care about what’s running in the container.

That’s very different from Docker or Rocket, which are application container managers (as opposed to system container managers). They focus on distributing apps as containers and so very much care about what runs inside the container.

There is absolutely nothing wrong with using LXD to run a bunch of full containers which then run Docker or Rocket inside of them to run their different applications. That way, you let LXD manage the host resources for you and apply all the security restrictions that make the container safe, and then use whatever application distribution mechanism you want inside.

Getting started with LXD

The simplest way for somebody to try LXD is by using it with its command line tool. This can easily be done on your laptop or desktop machine.

On an Ubuntu 15.04 system (or by using ppa:ubuntu-lxc/lxd-stable on 14.04 or above), you can install LXD with:

sudo apt-get install lxd

Then either log out and log in again to get your group membership refreshed, or use:

newgrp lxd

From that point on, you can interact with your newly installed LXD daemon.

The “lxc” command line tool lets you interact with one or multiple LXD daemons. By default it will interact with the local daemon, but you can easily add more of them.

As an easy way to start experimenting with remote servers, you can add our public LXD server at https://images.linuxcontainers.org:8443

That server is an image-only read-only server, so all you can do with it is list images, copy images from it or start containers from it.

To add the server, list all of its images and then start a container from one of them, run:

lxc remote add images images.linuxcontainers.org

lxc image list images:

lxc launch images:ubuntu/trusty/i386 ubuntu-32

The above defines a new “remote” called “images” which points to images.linuxcontainers.org, then lists all of its images and finally starts a local container called “ubuntu-32” from the ubuntu/trusty/i386 image. The image will automatically be cached locally so that future containers are started instantly.

The “<remote name>:” syntax is used throughout the lxc client. When not specified, the default “local” remote is assumed. Should you only care about managing a remote server, the default remote can be changed with “lxc remote set-default”.
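To make the naming rule concrete, here is a small illustration of how such a name splits into a remote and a resource. It is only a sketch of the convention described above, not the lxc client’s actual parser, and the split_remote function name is made up for this example.

```shell
# Hypothetical helper: split "<remote name>:<resource>" into its two parts.
# A name without a colon is assumed to live on the default "local" remote.
split_remote() {
    case "$1" in
        # Everything before the first colon is the remote,
        # everything after it is the image or container name.
        *:*) printf '%s %s\n' "${1%%:*}" "${1#*:}" ;;
        *)   printf '%s %s\n' "local" "$1" ;;
    esac
}
```

Running split_remote images:ubuntu/trusty/i386 prints “images ubuntu/trusty/i386”, while split_remote ubuntu-32 prints “local ubuntu-32”.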

Now that you have a running container, you can check its status and IP information with:

lxc list

Or get even more details with:

lxc info ubuntu-32

To get a shell inside the container, or to run any other command that you want, you may do:

lxc exec ubuntu-32 /bin/bash

And you can also directly pull or push files from/to the container with:

lxc file pull ubuntu-32/path/to/file .

lxc file push /path/to/file ubuntu-32/

When done, you can stop or delete your container with:

lxc stop ubuntu-32

lxc delete ubuntu-32
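Put together, the commands from this section can be chained into a quick throwaway test. The helper below is a hedged sketch: the try_container name and its default image argument are choices made for this example, and it assumes the “images” remote has already been added as shown earlier.

```shell
# Hypothetical helper: launch a container, show its details, then clean up.
# Usage: try_container <name> [<image>]
try_container() {
    name="$1"
    # Assumption: the "images" remote was added with "lxc remote add".
    image="${2:-images:ubuntu/trusty/i386}"

    # Give up early if the container cannot be created.
    lxc launch "$image" "$name" || return 1

    # Print the container's status and IP information.
    lxc info "$name"

    # Only delete the container once it has stopped cleanly.
    lxc stop "$name" && lxc delete "$name"
}
```

For example, try_container ubuntu-32 runs the same launch, info, stop and delete steps as above against the local daemon.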

What’s next?

The above should be a reasonably comprehensive guide to using LXD on a single system. Of course, that’s not the most interesting thing to do with LXD. All the commands shown above can work against multiple hosts: containers can be remotely created, moved around, copied, …

LXD also supports live migration, snapshots, configuration profiles, device pass-through and more.

I intend to write some more posts to cover those use cases and features as well as highlight some of the work we’re currently busy doing.

LXD is a pretty young but very active project. We’ve had great contributions from existing LXC developers as well as newcomers.

The project is entirely developed in the open at https://github.com/lxc/lxd. We keep track of upcoming features and improvements through the project’s issue tracker, so it’s easy to see what will be coming soon. We also have a set of issues marked “Easy” which are meant for new contributors as easy ways to get to know the LXD code and contribute to the project.

LXD is an Apache2 licensed project written in Go. It doesn’t require a CLA to contribute to (we do however require the standard DCO Signed-off-by). It can be built with both golang and gccgo and so works on almost all architectures.

Extra resources

More information can be found on the official LXD website:

https://linuxcontainers.org/lxd

The code, issues and pull requests can all be found on GitHub:

https://github.com/lxc/lxd

And a good overview of the LXD design and its API may be found in our specs:

https://github.com/lxc/lxd/tree/master/specs

Conclusion

LXD is a new and exciting project. It’s an amazing opportunity to think fresh about system containers and provide the best user experience possible, alongside great features and rock solid security.

With 7 releases and close to a thousand commits by 20 contributors, it’s a very active, fast paced project. Lots of things still remain to be implemented before we get to our 1.0 milestone release in early 2016 but looking at what was achieved in just 5 months, I’m confident we’ll have an incredible LXD in another 12 months!

For now, we’d welcome your feedback, so install LXD, play around with it, file bugs and let us know what’s important for you next.
