The Incus team is pleased to announce the release of Incus 6.14!

This is a lighter release with quite a few welcome bugfixes and performance improvements, wrapping up some of the work with the University of Texas students and adding a few smaller features.

It also fixes a couple of security issues affecting those using network ACLs or network isolation on bridge networks that use nftables.

The highlights for this release are:

- S3 upload of instance and volume backups

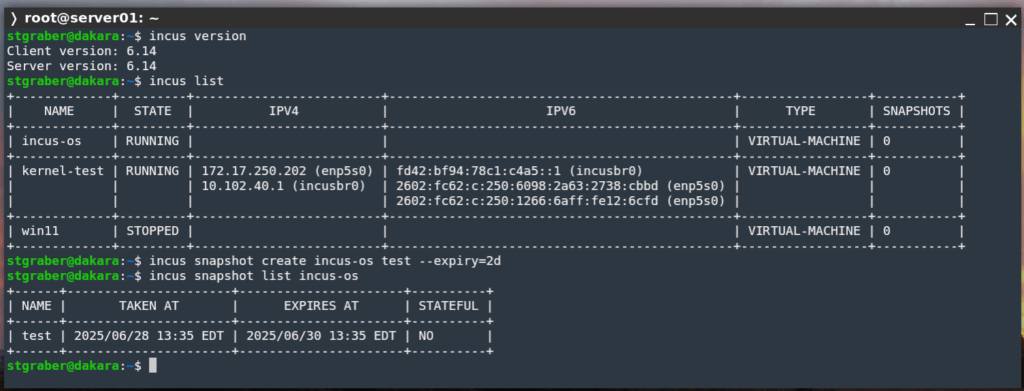

- Customizable expiry on snapshot creation

- Alternative default expiry for manually created snapshots

- Live migration tweaks and progress reporting

- Reporting of CPU address sizes in the resources API

- Database logic moved to our code generator

The full announcement and changelog can be found here.

And for those who prefer videos, here’s the release overview video:

You can take the latest release of Incus up for a spin through our online demo service at: https://linuxcontainers.org/incus/try-it/

And as always, my company offers commercial support for Incus, ranging from by-the-hour support contracts to one-off services such as initial migration from LXD, a review of your deployment to squeeze the most out of Incus, or even feature sponsorship. You’ll find all the details here: https://zabbly.com/incus

Donations towards my work on this and other open source projects are also always appreciated; you can find me on GitHub Sponsors, Patreon and Ko-fi.

Enjoy!