It’s now been two months since I left my position at Canonical and went freelance! A lot of things have now fallen into place, to the point where it almost feels like a normal work routine again 🙂

Kernel and ZFS builds

As mentioned in an earlier post, after over a year of rolling my own kernels and manually installing them on all my systems, I’ve decided to spend a bit of time automating the whole process and putting in place a proper build and publishing pipeline.

The result is mainline kernel builds that are updated and tested weekly, made available as Debian packages for Ubuntu 20.04 LTS, Ubuntu 22.04 LTS, Debian 11 and Debian 12 users. You can find those here:

And because I’m still a ZFS user and need a recent ZFS build to go along with those mainline kernels, I’ve also started building up-to-date ZFS packages here:

I’m now running those on a mix of Intel, AMD and Arm systems, from single-board computers to large AMD EPYC systems, and everything has been very smooth so far!

Incus

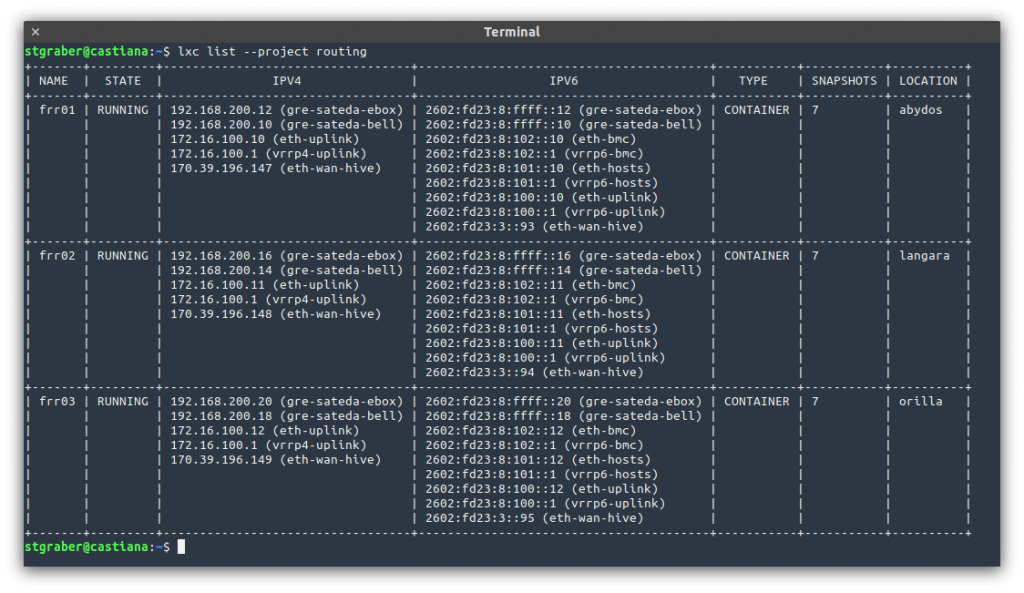

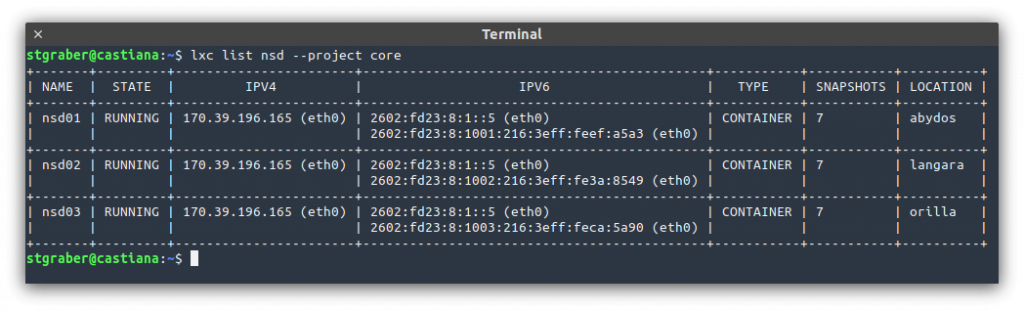

Most of my time has otherwise been spent working on Incus, the community LXD fork.

We’re almost done with the initial set of breaking changes, primarily removing outdated or irrelevant features, making some CLI changes, …

Recently I’ve been focusing on reorganizing the various Go packages in the codebase, trying to get all of that out of the way before spending any real time on the tooling to import LXD changes into Incus.

I’ve also been spending some time on the migration tool to transition users from LXD to Incus. There’s still some work to be done, but we’ve already converted a dozen or so systems with minimal additional work needed!

Next up for Incus will be some initial packages for Ubuntu and Debian users, making it a lot easier to automate testing and for early adopters to give it a try!

Currently the hope is for an initial release of Incus towards the end of September or early October.

YouTube

Last month I mentioned that I had created a new YouTube channel, though at the time there was no content on it yet. This has since changed!

I’ve been trying to do one live stream per week, showcasing some of the work going on with Incus. So far, there’s one video of me manually switching my main desktop machine from LXD to Incus, another on fixing some smaller issues, and a third showcasing the creation of the aforementioned LXD to Incus migration tool.

I’ll probably try to keep the weekly live stream going for a little while, at least until we have an initial Incus release out and can work on shorter, more thought-through videos showcasing various aspects of Incus.

Using Debian again

With the work to make my kernel and ZFS builds available to both Ubuntu and Debian users, along with the recent release of Debian 12, I’ve decided to give Debian a go on my main desktop machine.

I’ve been an Ubuntu user since 2004, so it had been almost 20 years since I last used Debian for anything more than a 30-minute test in a container. Overall, things went pretty smoothly. I don’t really need all that much to have a functional system, and I found that starting from a minimal installation made it pretty simple to get my system up and running with as few packages and random daemons as possible.

The bulk of my day-to-day work happens in VMs, containers and on remote servers, so I’ve not really noticed any difference so far. I imported my home directory and all my Incus data from my Ubuntu install and, after re-installing all my usual packages, I’ve effectively got a system that feels identical to my old Ubuntu install, minus the nagging to get me to use Ubuntu ESM 🙂

I’m still an Ubuntu Core Developer and I’ll still be using Ubuntu on a number of other machines, but it’s definitely been great to see how far Debian has come over all these years, and I expect I’ll be using it more in the years to come!

Sponsorship

As mentioned last month, I’ve set up a business here in Canada so I can easily handle contract work, consulting, training, … And I’m quite happy that I’ve already gotten to do a fair amount of that, with quite a bit more expected over the next few months!

If there’s a project you think I may be able to help you with, let me know at info@zabbly.com.

On top of that, I’ve also joined the GitHub Sponsors program.

This makes it pretty easy to receive both one-off and recurring contributions from users or organizations that appreciate the open source work I’ve been doing and want to help out!

Conclusion

Two months in, quite a lot has happened and quite a lot has changed from my old, more management-focused day-to-day work. I’m happy that a normal day for me now involves a lot more coding, problem solving and interacting with other passionate members of the open source community, and a lot fewer recurring meetings and JIRA updates 🙂

I’m excited about what’s coming over the next few months, especially getting an initial release of Incus out the door and to users, as well as some other projects I’m only starting to work on now!
