Introduction

A year ago today, my girlfriend and I (along with our cat) moved into our new house. It’s a pretty modern house on a 1.5 acre (~6000 sqm) piece of forested land, and it even includes an old maple shack!

Fully moving all our stuff from our previous house in Montreal took quite some time, but we got it all done and sold the Montreal house at the end of July.

The new house has 4 bedrooms and 2 bathrooms upstairs. The main floor has a massive open space for the living room, dining room and kitchen, along with a mudroom, washroom and pantry. On the lower level, we have a large two-car garage, a mechanical room and another small storage room.

That’s significantly larger than what we had back at the old house and that’s ignoring the much larger outside space which includes a large deck, the aforementioned maple shack, a swimming pool, chicken coop and a lot of trees!

Home automation platform

Now, being the geek that I am, I’ve always had an interest in home automation, though I also have developed quite an allergy to anything relying on cloud services and so focus on technologies that can work completely offline.

These days, it means running a moderately complex installation of Home Assistant, along with Mosquitto for all the MQTT integrations, MediaMTX to manage camera feeds and Frigate to analyze and record all the video footage.

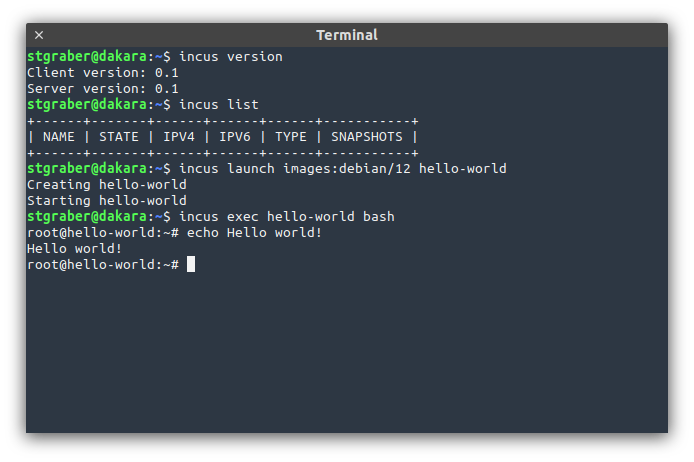

Obviously, all of the software components run in Incus containers, with some nested Docker containers for the components handling my Z-Wave, Zigbee and 433MHz radios.

Networking

On the networking side, the new house is getting 3Gbps symmetric fiber Internet along with a backup 100Mbps/30Mbps HFC link. Both of those are then connected back to my datacenter infrastructure over WireGuard, letting me use BGP with my public IP allocation and ASN at home too.

I used the opportunity of setting up a new house to go for a decent amount of future proofing, by which I mean, building an overkill network… I installed a fiber patching box which gets the main internet fiber along with two pairs of singlemode fiber to each of the switches around the property, two outside and four inside the house. The fiber patching box then uses a pair of MTP fibers to carry all 24 or so fibers to the core switch over just two cables. Each switch gets a bonded 2x10Gbps link back to the core switch, so suffice to say, I’m not going to have a client saturate a link anytime soon!

All the switches are MikroTik, with the core being a CRS326-24S+2Q+RM and the edge switches being a mix of netPower 16P and CRS326-24G-2S+RM depending on whether PoE is needed. I’m sticking to their RouterOS products as that then lets me handle configuration and backups through Ansible.

The wifi is a total of 7 Ubiquiti 6 APs, 3 U6-LR for the inside and 4 U6-Lite for the outside.

On the logical side of things, I’ve got separate VLANs and SSIDs for trusted clients, untrusted clients, IoT sensors and IoT cameras, with the last two of those getting no external access whatsoever.

This allows me to use just about any random brand camera or IoT device without fear of them dialing back home with all my data. The only real requirement for cameras is that they give me RTSP/RTMP.

Hardware

Now on to the home automation hardware side of things. As mentioned above, my Home Assistant setup can handle devices connected over Z-Wave, Zigbee, 433MHz or accessible over the internal network.

In general, I’m a big proponent of having home automation be there to help those living in the house; it should never get in the way of normal interactions with the house. This mostly means that every light switch is expected to function as a light switch, and the same goes for thermostats or anything else that’s visible to someone living in the space.

Here is an overview of what I ended up going with:

- Light switches (with neutral): Zooz ZEN77 dimmers (Z-Wave)

- Light switches (without neutral): Inovelli Blue Series dimmer (Zigbee)

- Baseboard thermostats: Stelpro STZW402WBPLUS (Z-Wave)

- Garage ceiling heater thermostat: Centralite 3000-W (Zigbee)

- Bathroom floor thermostat: Sinopé TH13000ZB (Zigbee)

- Concrete slab thermostat: Sinopé TH14000ZB (Zigbee)

- Pool pump control (smart switch): Zooz ZEN05 (Z-Wave)

- Pool heater control: Sinopé RM3250ZB (Zigbee)

- Door locks: Yale YRD156 (Z-Wave)

- Power panel metering: Aeon Labs ZW095 (Z-Wave)

- Air quality sensors: Tuya TS0601 (Zigbee)

- Door sensors: Third Reality 3RDS17BZ (Zigbee)

- Small appliance control: Sonoff S31ZB (Zigbee)

- Leak detectors: Third Reality 3RWS18BZ (Zigbee)

- Outdoor light sensors: Xiaomi GZCGQ11LM (Zigbee)

- Fridge and freezer sensors: AcuRite 06044M (433MHz)

- Pool temperature sensor: InkBird IBS-P01R (433MHz)

- Outdoor air temperature/humidity sensor: Hama Weather station (433MHz)

- Outdoor rain meter: AcuRite 00899 (433MHz)

- Vacuum cleaner: Roomba 960 (wifi)

- Car charger: OpenEVSE (wifi)

- Custom meters & controllers: ESP8266 boards (wifi)

- AC and fan control: Bestcon RM4C Mini (wifi)

- Indoor wireless cameras: Sonoff 1080P (wifi)

- Indoor wired cameras: Reolink RLC-520A (PoE)

- Outdoor IR cameras: CTVISION 5MP, Reolink 5MP, Reolink 4K, Veezoom 5MP (PoE)

- Outdoor non-IR cameras: Revotech Mini Camera (PoE)

- Cat feeder: Aqara Smart Pet Feeder C1 (Zigbee)

- Sound system: Sonos Arc & IKEA Symfonisk (wifi)

Note that this isn’t necessarily an endorsement for any of those products 🙂

For example, with the cameras, I’ve been going through a variety of manufacturers, some more reliable than others, especially when it comes to water ingress…

Automation

Having all of those in Home Assistant allows for some pretty good automation, things like getting notified of the mailbox being opened, along with a photo of whoever was there at the time. The same goes for the immediate perimeter of the house when we’re not there, which is useful to monitor deliveries. It’s also used to keep the house at a comfortable temperature year round without needlessly wasting energy heating unused rooms.

Our chicken coop is also fully automated. It opens automatically in the morning, closes at night and sends a photo to confirm that the chicken is back in. It also keeps the chicken inside when it’s too cold outside and turns on heat to the water dispenser to avoid it freezing over.
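As a rough sketch of that kind of decision logic (this is not the actual Home Assistant automation, and the temperature thresholds and names below are made up for illustration), the coop rules boil down to something like:

```python
from dataclasses import dataclass
from datetime import time


@dataclass
class CoopState:
    """Inputs the automation would read from sensors (hypothetical names)."""
    now: time
    sunrise: time
    sunset: time
    outside_temp_c: float


# Assumed thresholds, purely illustrative.
MIN_OPEN_TEMP_C = -15.0      # below this, keep the door closed all day
WATER_HEAT_BELOW_C = 1.0     # below this, heat the water dispenser


def door_should_be_open(s: CoopState) -> bool:
    """Open during the day, unless it is too cold outside."""
    daytime = s.sunrise <= s.now < s.sunset
    return daytime and s.outside_temp_c >= MIN_OPEN_TEMP_C


def water_heater_should_be_on(s: CoopState) -> bool:
    """Heat the water dispenser whenever it could freeze."""
    return s.outside_temp_c < WATER_HEAT_BELOW_C
```

In a real setup the sunrise and sunset times would come from the home automation platform rather than being passed in by hand.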

The swimming pool equipment that came with the house didn’t allow any real automation either, so instead we’re relying on relays and smart plugs to control it. The pool temperature sensor combined with the big relay controlling the heat pump allows Home Assistant to act as a thermostat for the pool.
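A thermostat built from a temperature sensor and a relay is usually an on/off controller with a bit of hysteresis, so the relay doesn’t chatter around the setpoint. Here is a minimal sketch of that idea; the function name and the 0.5°C deadband are assumptions, not values from my setup:

```python
def pool_heater_command(water_temp_c: float,
                        target_c: float,
                        heater_on: bool,
                        deadband_c: float = 0.5) -> bool:
    """Return the desired relay state for a simple hysteresis thermostat.

    The heater turns on once the water drops to target - deadband,
    turns off once it reaches target + deadband, and otherwise keeps
    its current state.
    """
    if water_temp_c <= target_c - deadband_c:
        return True
    if water_temp_c >= target_c + deadband_c:
        return False
    return heater_on
```

Calling this every time the sensor reports a new value and applying the result to the relay is enough to hold the pool near the target temperature.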

The smart plug controlling the pump allows for quite some energy savings by only filtering the pool for as long as is needed (based on usage and temperature).

Probably the most useful of all from a financial standpoint is support for automatically handling peak periods with the utility, during which they credit money for any kWh of power saved compared to the same period of the previous days. Home Assistant can simply pre-heat the house a bit ahead of time and then turn off just about anything for the peak period. That keeps things perfectly livable and saves a fair amount of money by the end of the season!
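To make the peak period idea a bit more concrete, here is a small sketch of the two pieces involved: picking a heating mode around a peak event, and estimating the credit for it. The two hour pre-heat window, the use of a plain average as the baseline and the credit rate are all assumptions for illustration; the utility’s actual formula may differ.

```python
from datetime import datetime, timedelta
from statistics import mean


def heating_mode(now: datetime,
                 peak_start: datetime,
                 peak_end: datetime,
                 preheat: timedelta = timedelta(hours=2)) -> str:
    """Pick a mode: pre-heat before the peak, shed load during it."""
    if peak_start <= now < peak_end:
        return "shed"
    if peak_start - preheat <= now < peak_start:
        return "preheat"
    return "normal"


def peak_credit(previous_days_kwh: list[float],
                event_kwh: float,
                credit_per_kwh: float) -> float:
    """Estimate the credit for one peak event.

    The baseline is assumed to be the average consumption over the
    same period on previous days; only savings below it are credited.
    """
    baseline = mean(previous_days_kwh)
    saved = max(0.0, baseline - event_kwh)
    return saved * credit_per_kwh
```

For example, with previous days at 12, 10 and 14 kWh for that period, using only 4 kWh during the event means 8 kWh saved against a 12 kWh baseline.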

What’s next

In general, I’m very happy with how things stand now.

There are really only two things which aren’t controllable yet and which would be useful to be able to control, especially when the utility provides an incentive to reduce power consumption: the water heater and the air exchanger. For the water heater, Sinopé makes the perfect controller for it, I’m just waiting for it to be more readily available. For the air exchanger, I have yet to decide between trying to reverse engineer the control signal with an ESP8266 or going the lazy route and just using a controllable outlet and leaving it in the same mode forever.

We’ve been getting a few power cuts and despite having 6U worth of UPS for my servers and the core network, it’s annoying to have the rest of the house lose power. To fix that, we got a second electrical panel installed for the critical loads which will hopefully soon be fed by a battery system.

Over the next year, I also expect the maple shack to get brought to a more livable state, with the current plan to relocate the servers and part of my office down to it. This will involve a fair bit of construction as well as running fiber and a beefier power cable down there but would provide for good home/work separation while still not having to drive anywhere 🙂
