This is the twelfth and last blog post in this series about LXD 2.0.

Introduction

This is finally it! The last blog post in this series of 12 that started almost a year ago.

If you followed the series from the beginning, you should have been using LXD for quite a while now and be pretty familiar with its day-to-day operation and capabilities.

But what if something goes wrong? What can you do to track down the problem yourself? And if you can’t, what information should you record so that upstream can track down the problem?

And what if you want to fix issues yourself or help improve LXD by implementing the features you need? How do you build, test and contribute to the LXD code base?

Debugging LXD & filing bug reports

LXD log files

/var/log/lxd/lxd.log

This is the main LXD log file. To avoid filling up your disk very quickly, only log messages marked as INFO, WARNING or ERROR are recorded there by default. You can change that behavior by passing “--debug” to the LXD daemon.

/var/log/lxd/CONTAINER/lxc.conf

Whenever you start a container, this file is updated with the configuration that’s passed to LXC.

This shows exactly how the container will be configured, including all its devices, bind-mounts, …

/var/log/lxd/CONTAINER/forkexec.log

This file will contain errors coming from LXC when failing to execute a command.

It’s extremely rare for anything to end up in there as LXD usually handles errors well before that.

/var/log/lxd/CONTAINER/forkstart.log

This file will contain errors coming from LXC when starting the container.

It’s extremely rare for anything to end up in there as LXD usually handles errors well before that.
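To make the layout above concrete, here is a minimal sketch that prints the per-container files described in this section and notes whether each one exists and has content. The function name lxd_container_logs and the LXD_LOG_DIR override are assumptions made for this example; they are not part of LXD.

```shell
#!/bin/sh
# Hypothetical helper: report on the per-container log files described above.
# LXD_LOG_DIR is an assumption of this sketch so the base path can be changed;
# it defaults to /var/log/lxd, the location used throughout this post.
lxd_container_logs() (
    container="$1"
    base="${LXD_LOG_DIR:-/var/log/lxd}"
    for name in lxc.conf forkexec.log forkstart.log; do
        path="$base/$container/$name"
        if [ -s "$path" ]; then
            echo "$path (has content)"
        elif [ -e "$path" ]; then
            echo "$path (empty)"
        else
            echo "$path (missing)"
        fi
    done
)

# Example: lxd_container_logs xen
```

A non-empty forkexec.log or forkstart.log is the interesting case here, since those files normally stay empty.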

CRIU logs (for live migration)

If you are using CRIU for container live migration or live snapshotting there are additional log files recorded every time a CRIU dump is generated or a dump is restored.

Those logs can also be found in /var/log/lxd/CONTAINER/ and are timestamped so that you can find whichever matches your most recent attempt. They will contain a detailed record of everything that’s dumped and restored by CRIU and are far better for understanding a failure than the typical migration/snapshot error message.

LXD debug messages

As mentioned above, you can switch the daemon to debug logging with the --debug option.

An alternative is to connect to the daemon’s event interface, which shows you all log entries regardless of the configured log level (this even works remotely).

An example for “lxc start xen” would be:

lxd.log:

INFO[02-24|18:14:09] Starting container action=start created=2017-02-24T23:11:45+0000 ephemeral=false name=xen stateful=false used=1970-01-01T00:00:00+0000

INFO[02-24|18:14:10] Started container action=start created=2017-02-24T23:11:45+0000 ephemeral=false name=xen stateful=false used=1970-01-01T00:00:00+0000

lxc monitor --type=logging:

metadata:
  context: {}
  level: dbug
  message: 'New events listener: 9b725741-ffe7-4bfc-8d3e-fe620fc6e00a'
timestamp: 2017-02-24T18:14:01.025989062-05:00
type: logging

metadata:
  context:
    ip: '@'
    method: GET
    url: /1.0
  level: dbug
  message: handling
timestamp: 2017-02-24T18:14:09.341283344-05:00
type: logging

metadata:
  context:
    driver: storage/zfs
  level: dbug
  message: StorageCoreInit
timestamp: 2017-02-24T18:14:09.341536477-05:00
type: logging

metadata:
  context:
    ip: '@'
    method: GET
    url: /1.0/containers/xen
  level: dbug
  message: handling
timestamp: 2017-02-24T18:14:09.347709394-05:00
type: logging

metadata:
  context:
    ip: '@'
    method: PUT
    url: /1.0/containers/xen/state
  level: dbug
  message: handling
timestamp: 2017-02-24T18:14:09.357046302-05:00
type: logging

metadata:
  context: {}
  level: dbug
  message: 'New task operation: 2e2cf904-c4c4-4693-881f-57897d602ad3'
timestamp: 2017-02-24T18:14:09.358387853-05:00
type: logging

metadata:
  context: {}
  level: dbug
  message: 'Started task operation: 2e2cf904-c4c4-4693-881f-57897d602ad3'
timestamp: 2017-02-24T18:14:09.358578599-05:00
type: logging

metadata:
  context:
    ip: '@'
    method: GET
    url: /1.0/operations/2e2cf904-c4c4-4693-881f-57897d602ad3/wait
  level: dbug
  message: handling
timestamp: 2017-02-24T18:14:09.366213106-05:00
type: logging

metadata:
  context:
    driver: storage/zfs
  level: dbug
  message: StoragePoolInit
timestamp: 2017-02-24T18:14:09.369636451-05:00
type: logging

metadata:
  context:
    driver: storage/zfs
  level: dbug
  message: StoragePoolCheck
timestamp: 2017-02-24T18:14:09.369771164-05:00
type: logging

metadata:
  context:
    container: xen
    driver: storage/zfs
  level: dbug
  message: ContainerMount
timestamp: 2017-02-24T18:14:09.424696767-05:00
type: logging

metadata:
  context:
    driver: storage/zfs
    name: xen
  level: dbug
  message: ContainerUmount
timestamp: 2017-02-24T18:14:09.432723719-05:00
type: logging

metadata:
  context:
    container: xen
    driver: storage/zfs
  level: dbug
  message: ContainerMount
timestamp: 2017-02-24T18:14:09.721067917-05:00
type: logging

metadata:
  context:
    action: start
    created: 2017-02-24 23:11:45 +0000 UTC
    ephemeral: "false"
    name: xen
    stateful: "false"
    used: 1970-01-01 00:00:00 +0000 UTC
  level: info
  message: Starting container
timestamp: 2017-02-24T18:14:09.749808518-05:00
type: logging

metadata:
  context:
    ip: '@'
    method: GET
    url: /1.0
  level: dbug
  message: handling
timestamp: 2017-02-24T18:14:09.792551375-05:00
type: logging

metadata:
  context:
    driver: storage/zfs
  level: dbug
  message: StorageCoreInit
timestamp: 2017-02-24T18:14:09.792961032-05:00
type: logging

metadata:
  context:
    ip: '@'
    method: GET
    url: /internal/containers/23/onstart
  level: dbug
  message: handling
timestamp: 2017-02-24T18:14:09.800803501-05:00
type: logging

metadata:
  context:
    driver: storage/zfs
  level: dbug
  message: StoragePoolInit
timestamp: 2017-02-24T18:14:09.803190248-05:00
type: logging

metadata:
  context:
    driver: storage/zfs
  level: dbug
  message: StoragePoolCheck
timestamp: 2017-02-24T18:14:09.803251188-05:00
type: logging

metadata:
  context:
    container: xen
    driver: storage/zfs
  level: dbug
  message: ContainerMount
timestamp: 2017-02-24T18:14:09.803306055-05:00
type: logging

metadata:
  context: {}
  level: dbug
  message: 'Scheduler: container xen started: re-balancing'
timestamp: 2017-02-24T18:14:09.965080432-05:00
type: logging

metadata:
  context:
    action: start
    created: 2017-02-24 23:11:45 +0000 UTC
    ephemeral: "false"
    name: xen
    stateful: "false"
    used: 1970-01-01 00:00:00 +0000 UTC
  level: info
  message: Started container
timestamp: 2017-02-24T18:14:10.162965059-05:00
type: logging

metadata:
  context: {}
  level: dbug
  message: 'Success for task operation: 2e2cf904-c4c4-4693-881f-57897d602ad3'
timestamp: 2017-02-24T18:14:10.163072893-05:00
type: logging

The format from “lxc monitor” is a bit different from what you’d get in a log file, where each entry is condensed into a single line, but more importantly you see all those “level: dbug” entries.
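Because the monitor output is verbose, a quick summary per log level can help when deciding what to attach to a bug report. The sketch below counts entries per level in output saved from “lxc monitor --type=logging”. The function name count_levels and the file name monitor.yaml are assumptions for this example, and the sketch relies only on each entry carrying a “level:” line as in the sample above.

```shell
#!/bin/sh
# Hypothetical helper: count log entries per level.
# Reads "lxc monitor --type=logging" output on stdin and assumes every entry
# contains a "level: <name>" line, as in the sample shown in this post.
count_levels() {
    awk '$1 == "level:" { n[$2]++ } END { for (l in n) print l, n[l] }' | sort
}

# Example (needs a running LXD daemon):
#   lxc monitor --type=logging > monitor.yaml   # stop with Ctrl+C when done
#   count_levels < monitor.yaml
```

Applied to the sample above, this would report 21 dbug entries and 2 info entries, which matches the two lines that made it into lxd.log.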

Where to report bugs

LXD bugs

The best place to report LXD bugs is upstream at https://github.com/lxc/lxd/issues.

Make sure to fill in everything in the bug reporting template as that information saves us a lot of back and forth to reproduce your environment.

Ubuntu bugs

If you find a problem with the Ubuntu package itself (failing to install, upgrade or remove) or run into issues with the LXD init scripts, the best place to report such bugs is on Launchpad.

On an Ubuntu system, you can do so with: ubuntu-bug lxd

This will automatically include a number of log files and package information for us to look at.

CRIU bugs

Bugs related to CRIU, which you can usually spot by the pretty visible CRIU error output, should be reported on Launchpad with: ubuntu-bug criu

Do note that the use of CRIU through LXD is considered to be a beta feature and unless you are willing to pay for support through a support contract with Canonical, it may take a while before we get to look at your bug report.

Contributing to LXD

LXD is written in Go and hosted on GitHub.

We welcome external contributions of any size. There is no CLA or similar legal agreement to sign to contribute to LXD, just the usual Developer Certificate of Origin (Signed-off-by: line).

We have a number of potential features listed on our issue tracker that can make good starting points for new contributors. It’s usually best to first file an issue before starting to work on code, just so everyone knows that you’re doing that work and so we can give some early feedback.

Building LXD from source

Upstream maintains up to date instructions here: https://github.com/lxc/lxd#building-from-source

You’ll want to fork the upstream repository on GitHub and then push your changes to your branch. We recommend rebasing on upstream LXD daily as we do tend to merge changes pretty regularly.
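The daily rebase can be sketched with plain git commands. This is only an illustration: the remote name “upstream” and the function name rebase_on_upstream are assumptions for this example, while the master branch is the one mentioned later in this post.

```shell
#!/bin/sh
# Hypothetical helper: rebase the current branch on upstream LXD.
# Assumes a remote called "upstream" pointing at the main repository.
# It can be added once with:
#   git remote add upstream https://github.com/lxc/lxd
rebase_on_upstream() {
    git fetch upstream &&
    git rebase upstream/master
}

# Example, from inside your local clone with your work branch checked out:
#   rebase_on_upstream
```

If the rebase stops on a conflict, git prints the affected files and waits for you to resolve them before continuing.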

Running the testsuite

LXD maintains two sets of tests: unit tests and integration tests. You can run all of them with:

sudo -E make check

To run the unit tests only, use:

sudo -E go test ./...

To run the integration tests, use:

cd test

sudo -E ./main.sh

The latter supports quite a number of environment variables to test various storage backends, disable network tests, use a ramdisk or just tweak log output. Some of those are:

- LXD_BACKEND: One of “btrfs”, “dir”, “lvm” or “zfs” (defaults to “dir”)

Lets you run the whole testsuite with any of the LXD storage drivers.

- LXD_CONCURRENT: “true” or “false” (defaults to “false”)

This enables a few extra concurrency tests.

- LXD_DEBUG: “true” or “false” (defaults to “false”)

This will log all shell commands and run all LXD commands in debug mode.

- LXD_INSPECT: “true” or “false” (defaults to “false”)

This will cause the testsuite to hang on failure so you can inspect the environment.

- LXD_LOGS: A directory to dump all LXD log files into (defaults to “”)

The “logs” directory of all spawned LXD daemons will be copied over to this path.

- LXD_OFFLINE: “true” or “false” (defaults to “false”)

Disables any test which relies on outside network connectivity.

- LXD_TEST_IMAGE: path to a LXD image in the unified format (defaults to “”)

Lets you use a custom test image rather than the default minimal busybox image.

- LXD_TMPFS: “true” or “false” (defaults to “false”)

Runs the whole testsuite within a “tmpfs” mount. This can use quite a bit of memory but makes the testsuite significantly faster.

- LXD_VERBOSE: “true” or “false” (defaults to “false”)

A less extreme version of LXD_DEBUG. Shell commands are still logged but --debug isn’t passed to the LXC commands and the LXD daemon only runs with --verbose.

The testsuite will alert you to any missing dependency before it actually runs. A test run on a reasonably fast machine completes in under 10 minutes.
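As an illustration of combining these variables, the sketch below runs the integration tests once per storage backend with network-dependent tests disabled. The function name run_backends and the DRY_RUN switch are assumptions of this example and are not part of the testsuite; the LXD_* variables and the main.sh invocation are the ones listed above.

```shell
#!/bin/sh
# Hypothetical helper: run the integration tests once per storage backend.
# DRY_RUN is an assumption of this sketch: when set to "true", the commands
# are printed instead of executed, so you can check what would run.
run_backends() {
    for backend in "$@"; do
        if [ "${DRY_RUN:-false}" = "true" ]; then
            echo "LXD_BACKEND=$backend LXD_OFFLINE=true sudo -E ./main.sh"
        else
            # sudo -E keeps the variables set here, as in the commands above.
            LXD_BACKEND="$backend" LXD_OFFLINE=true sudo -E ./main.sh || return 1
        fi
    done
}

# Example, from the "test" directory of an LXD checkout:
#   run_backends dir zfs
```

The loop stops at the first failing backend so that its logs are the most recent ones on disk.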

Sending your branch

Before sending a pull request, you’ll want to confirm that:

- Your branch has been rebased on the upstream branch

- All your commit messages include the “Signed-off-by: First Last <email>” line

- You’ve removed any temporary debugging code you may have used

- You’ve squashed related commits together to keep your branch easily reviewable

- The unit and integration tests all pass
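The sign-off item in this checklist is easy to verify locally before pushing. The sketch below lists commits on your branch that lack a Signed-off-by line. The function names has_signoff and missing_signoff and the default base of upstream/master are assumptions for this example; “git commit -s” is the standard git option that adds the line for you.

```shell
#!/bin/sh
# Hypothetical helper: succeed if the commit message on stdin contains a
# "Signed-off-by: First Last <email>" line.
has_signoff() {
    grep -q '^Signed-off-by: .* <.*>$'
}

# Hypothetical helper: print every commit between a base ref and HEAD whose
# message has no sign-off. The base defaults to upstream/master, which
# assumes a remote named "upstream".
missing_signoff() {
    base="${1:-upstream/master}"
    for commit in $(git rev-list "$base..HEAD"); do
        if ! git log -1 --format=%B "$commit" | has_signoff; then
            git log -1 --format='%h %s' "$commit"
        fi
    done
}

# Example:
#   git commit -s -m "Describe your change"   # -s adds the Signed-off-by line
#   missing_signoff                           # no output means all commits are signed off
```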

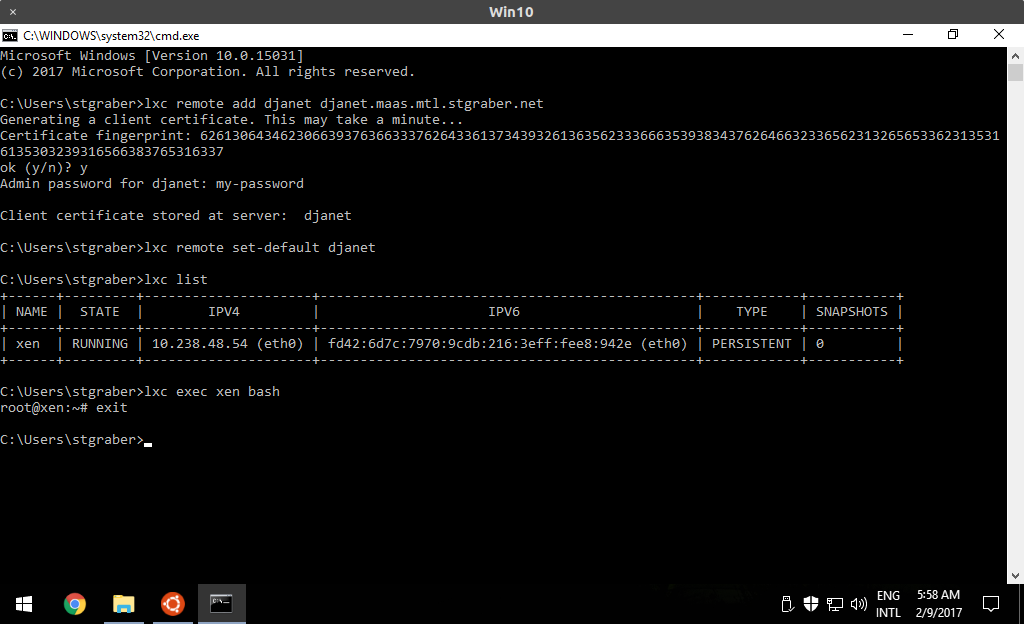

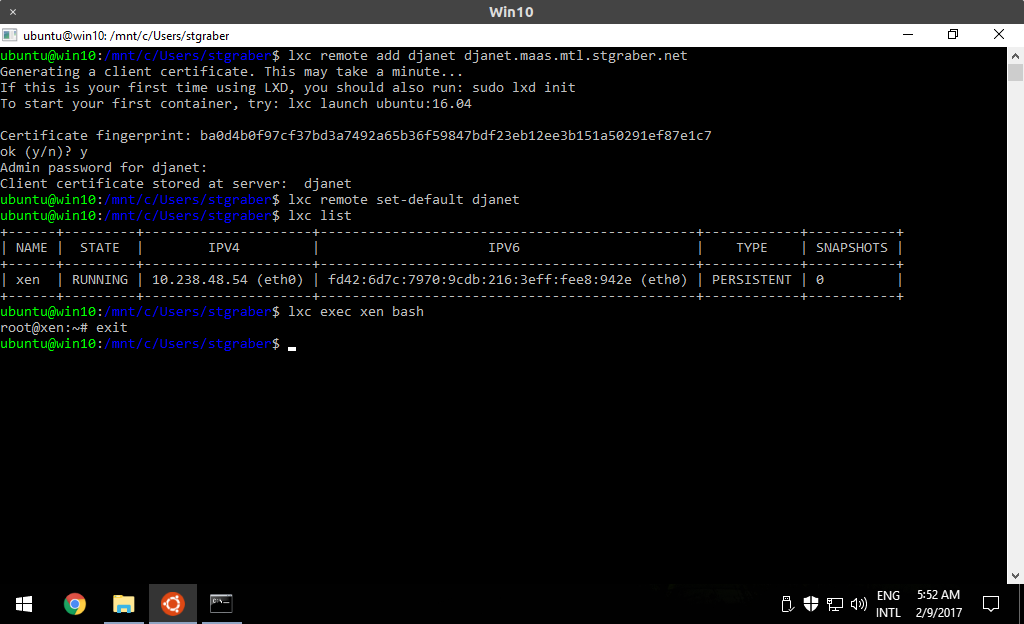

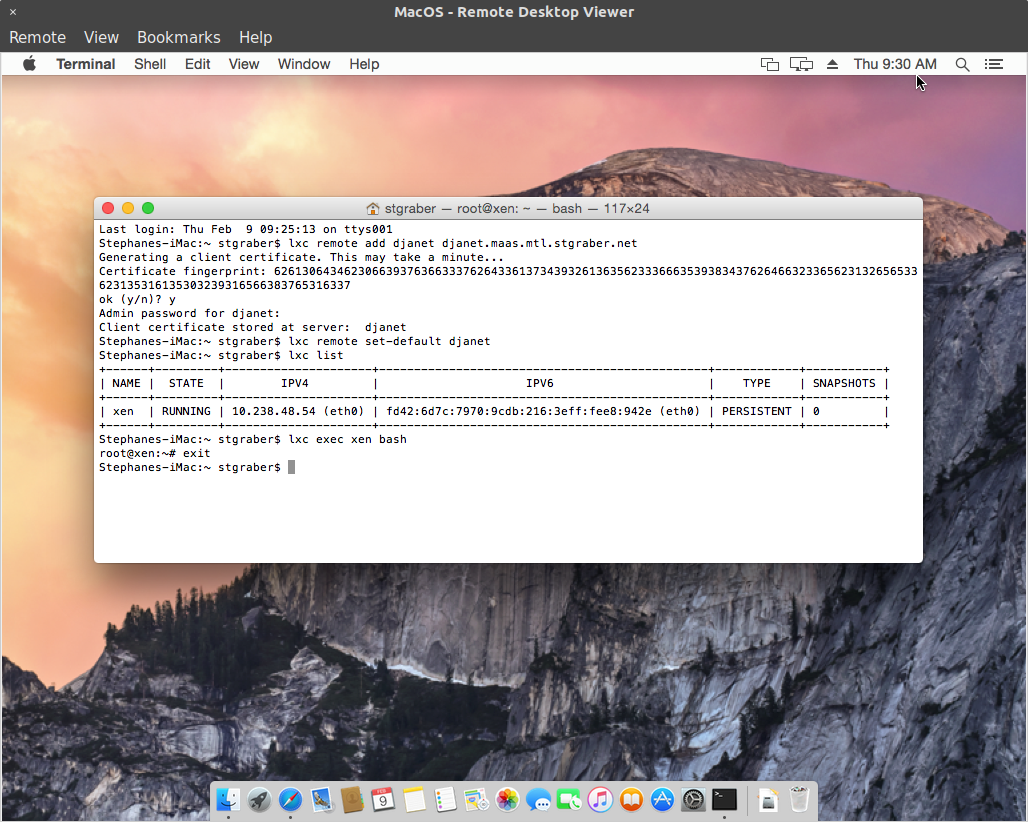

Once that’s all done, open a pull request on GitHub. Our Jenkins will validate that the commits are all signed-off, and a test build on macOS and Windows will automatically be performed. If things look good, we’ll trigger a full Jenkins test run that will test your branch on all storage backends, on 32-bit and 64-bit, and with all the Go versions we care about.

This typically takes less than an hour to happen, assuming one of us is around to trigger Jenkins.

Once all the tests are done and we’re happy with the code itself, your branch will be merged into master and your code will be in the next LXD feature release. If the changes are suitable for the LXD stable-2.0 branch, we’ll backport them for you.

Conclusion

I hope this series of blog posts has been helpful in understanding what LXD is and what it can do!

This series’ scope was limited to the LTS version of LXD (2.0.x) but we also do monthly feature releases for those who want the latest features. You can find a few other blog posts covering such features listed in the original LXD 2.0 series post.

Extra information

The main LXD website is at: https://linuxcontainers.org/lxd

Development happens on GitHub at: https://github.com/lxc/lxd

Mailing-list support happens on: https://lists.linuxcontainers.org

IRC support happens in: #lxcontainers on irc.freenode.net

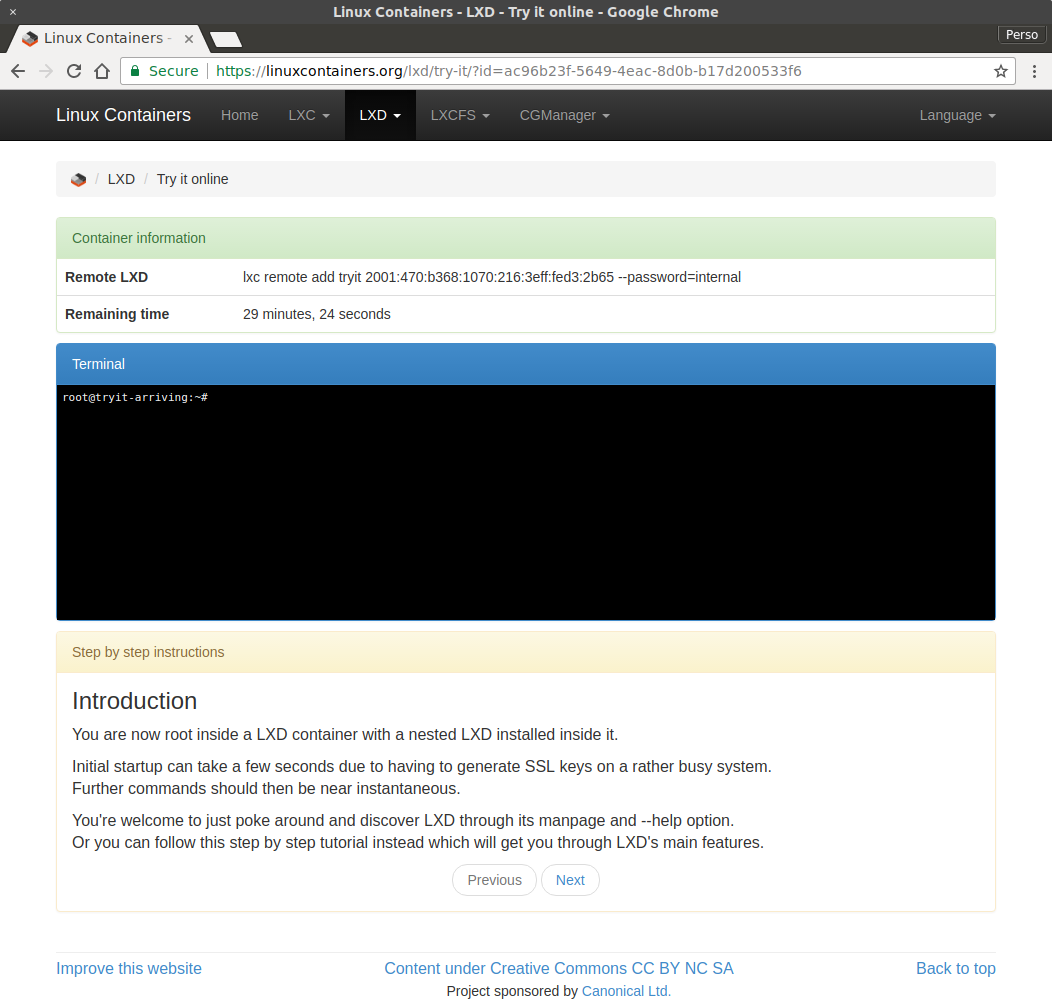

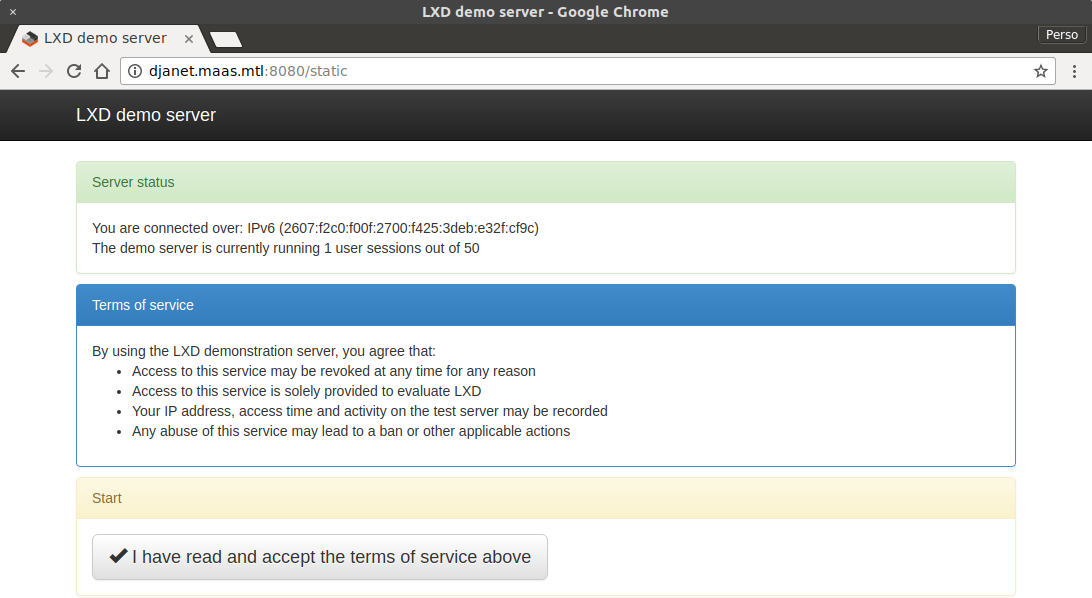

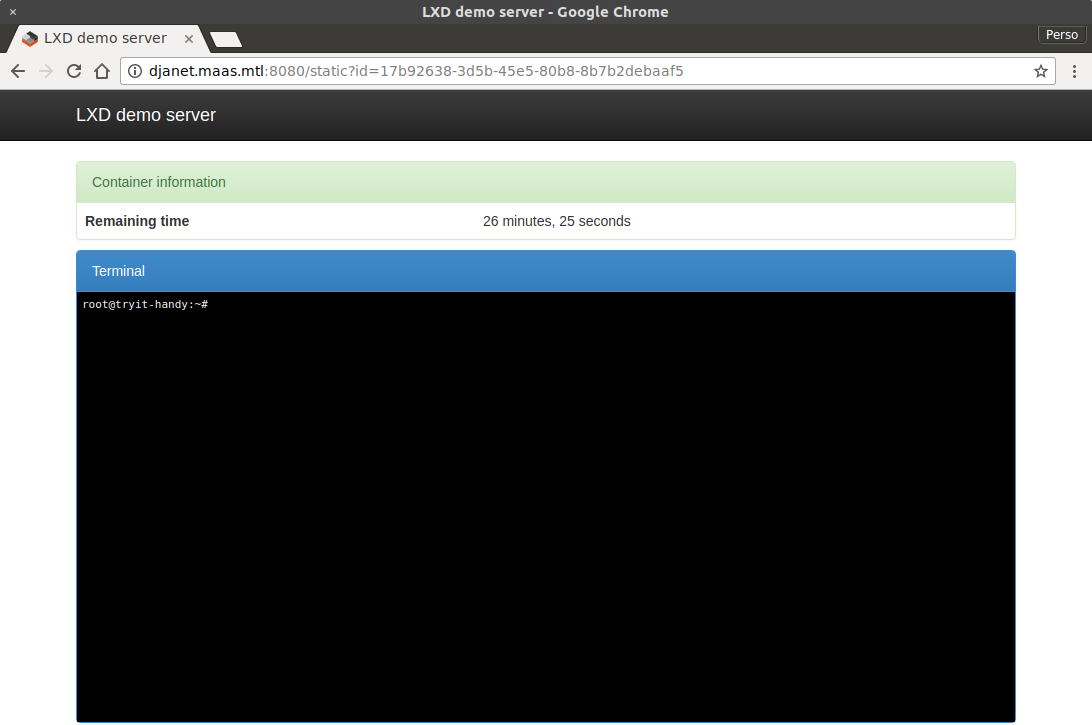

Try LXD online: https://linuxcontainers.org/lxd/try-it
